Friday, 27 July 2018

Machine Learning Laboratory (15CSL76): Program 10: Locally Weighted Regression algorithm

Implement the non-parametric Locally Weighted Regression algorithm in order to fit data points. Select appropriate data set for your experiment and draw graphs.

Machine Learning Laboratory (15CSL76): Program 8: EM Algorithm / k-Means algorithm

Lab Program 8:

Apply EM algorithm to cluster a set of data stored in a .CSV file. Use the same data set for clustering using k-Means algorithm. Compare the results of these two algorithms and comment on the quality of clustering. You can add Java/Python ML library classes/API in the program.
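As a rough illustration of the comparison asked for in Lab Program 8, the sketch below runs k-Means and EM (for a two-component Gaussian mixture) on the same one-dimensional data and reports how often the two labelings agree. The data values, the starting centres, and the function names `k_means` and `em_gmm` are all assumptions made for this example; a real submission would read the points from a .CSV file and could use ML library classes instead.

```python
import math

def k_means(points, centers, iterations=20):
    """Plain k-Means on 1-D data: assign to nearest centre, then recompute centres."""
    centers = list(centers)
    labels = []
    for _ in range(iterations):
        labels = [min(range(len(centers)), key=lambda k: abs(x - centers[k]))
                  for x in points]
        for k in range(len(centers)):
            members = [x for x, label in zip(points, labels) if label == k]
            if members:  # leave an empty cluster's centre unchanged
                centers[k] = sum(members) / len(members)
    return centers, labels

def gaussian(x, mean, var):
    """Density of a 1-D normal distribution."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm(points, means, iterations=50):
    """EM for a 1-D Gaussian mixture; returns final means and hard labels."""
    k = len(means)
    means = list(means)
    variances = [1.0] * k
    weights = [1.0 / k] * k
    resp = []
    for _ in range(iterations):
        # E-step: responsibility of each component for each point
        resp = []
        for x in points:
            p = [weights[j] * gaussian(x, means[j], variances[j]) for j in range(k)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # M-step: re-estimate weight, mean and variance of each component
        for j in range(k):
            nj = sum(r[j] for r in resp)
            means[j] = sum(r[j] * x for r, x in zip(resp, points)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, points)) / nj
            variances[j] = max(var, 1e-6)  # floor avoids a collapsed component
            weights[j] = nj / len(points)
    labels = [max(range(k), key=lambda j: r[j]) for r in resp]
    return means, labels

# Hypothetical, well-separated sample data (not from any real data set).
DATA = [1.0, 1.2, 0.8, 1.1, 5.0, 5.2, 4.8, 5.1]
START = [0.0, 6.0]

km_centers, km_labels = k_means(DATA, START)
em_means, em_labels = em_gmm(DATA, START)
agreement = sum(a == b for a, b in zip(km_labels, em_labels)) / len(DATA)
print("k-Means centres:", km_centers)
print("EM means:", em_means)
print("label agreement:", agreement)
```

On data this cleanly separated the two methods should agree; differences are more likely to show up when clusters overlap or have unequal spread, since EM models a variance per component while k-Means uses distance to the centre only.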

Machine Learning Laboratory (15CSL76): Program 7: Bayesian network

Lab Program 7:

Write a program to construct a Bayesian network considering medical data. Use this model to demonstrate the diagnosis of heart patients using standard Heart Disease Data Set. You can use Java/Python ML library classes/API.

Machine Learning Laboratory (15CSL76): Program 6: naïve Bayesian Classifier

Lab Program 6:

Assuming a set of documents that need to be classified, use the naïve Bayesian Classifier model to perform this task. Built-in Java classes/API can be used to write the program. Calculate the accuracy, precision, and recall for your data set.
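One possible shape for Lab Program 6 is sketched below: a multinomial naïve Bayes text classifier with add-one (Laplace) smoothing, followed by accuracy, precision, and recall on a small held-out set. The documents, the labels `pos`/`neg`, and the function names are invented for illustration; the program statement permits library classes, but this sketch uses plain Python only.

```python
import math
from collections import Counter, defaultdict

def train(docs):
    """docs is a list of (text, label); returns priors, per-class word counts, vocabulary."""
    class_docs = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    for text, label in docs:
        word_counts[label].update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    priors = {c: n / len(docs) for c, n in class_docs.items()}
    return priors, word_counts, vocab

def predict(text, model):
    """Return the class with the highest log posterior for the given text."""
    priors, word_counts, vocab = model
    scores = {}
    for c in priors:
        total = sum(word_counts[c].values())
        score = math.log(priors[c])
        for w in text.lower().split():
            if w in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[c][w] + 1) / (total + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

def evaluate(test_docs, model, positive="pos"):
    """Accuracy over all classes; precision and recall for the `positive` class."""
    tp = fp = fn = correct = 0
    for text, actual in test_docs:
        guess = predict(text, model)
        correct += guess == actual
        tp += guess == positive and actual == positive
        fp += guess == positive and actual != positive
        fn += guess != positive and actual == positive
    accuracy = correct / len(test_docs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Hypothetical documents, made up for this example.
TRAIN = [
    ("I love this sandwich", "pos"),
    ("this is an amazing place", "pos"),
    ("I feel very good about these beers", "pos"),
    ("I do not like this restaurant", "neg"),
    ("I am tired of this stuff", "neg"),
    ("he is my sworn enemy", "neg"),
]
TEST = [
    ("I love this place", "pos"),
    ("I am tired of this restaurant", "neg"),
    ("an amazing sandwich", "pos"),
    ("my enemy is tired", "neg"),
]

model = train(TRAIN)
accuracy, precision, recall = evaluate(TEST, model)
print("accuracy:", accuracy, "precision:", precision, "recall:", recall)
```

Scores are summed in log space so that long documents do not underflow, and the smoothing term keeps a word that is absent from one class from zeroing out that class.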

Machine Learning Laboratory (15CSL76): Program 2: CANDIDATE-ELIMINATION Algorithm

Lab Program 2:

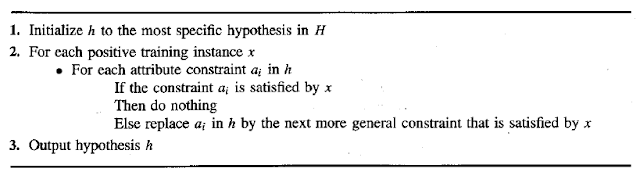

For a given set of training data examples stored in a .CSV file, implement and demonstrate the Candidate-Elimination algorithm to output a description of the set of all hypotheses consistent with the training examples.

Algorithm:
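A minimal sketch of Candidate-Elimination follows, under several assumptions that are not stated in the post: hypotheses are conjunctions of attribute values with `?` as a wildcard, the data is noise-free, the last CSV column holds a `yes`/`no` label, and the specific boundary is kept as a single hypothesis `S` (which suffices for this hypothesis language). The EnjoySport-style rows and all function names are illustrative only.

```python
import csv
import io

def covers(h, x):
    """True if hypothesis h classifies instance x as positive."""
    return all(hv == "?" or hv == xv for hv, xv in zip(h, x))

def more_general_or_equal(h1, h2):
    """True if h1 covers everything that h2 covers."""
    return all(a == "?" or a == b for a, b in zip(h1, h2))

def candidate_elimination(examples, positive_label="yes"):
    n = len(examples[0]) - 1
    # Attribute domains are taken from the values seen in the data (an assumption).
    domains = [sorted({row[i] for row in examples}) for i in range(n)]
    S = None                      # None stands for the most specific hypothesis
    G = [tuple("?" for _ in range(n))]
    for *attrs, label in examples:
        x = tuple(attrs)
        if label == positive_label:
            # Drop general hypotheses that miss x, then minimally generalise S.
            G = [g for g in G if covers(g, x)]
            S = x if S is None else tuple(s if s == v else "?" for s, v in zip(S, x))
        else:
            candidates = []
            for g in G:
                if not covers(g, x):
                    candidates.append(g)
                    continue
                # Minimal specialisations of g that exclude x but still cover S.
                for i in range(n):
                    if g[i] != "?":
                        continue
                    for value in domains[i]:
                        if value == x[i]:
                            continue
                        spec = g[:i] + (value,) + g[i + 1:]
                        if S is None or more_general_or_equal(spec, S):
                            candidates.append(spec)
            candidates = list(dict.fromkeys(candidates))  # de-duplicate, keep order
            # Keep only the maximally general members.
            G = [g for g in candidates
                 if not any(h != g and more_general_or_equal(h, g) for h in candidates)]
    return S, G

# Hypothetical training data in the style of the EnjoySport example.
SAMPLE_CSV = """sunny,warm,normal,strong,warm,same,yes
sunny,warm,high,strong,warm,same,yes
rainy,cold,high,strong,warm,change,no
sunny,warm,high,strong,cool,change,yes
"""

rows = [row for row in csv.reader(io.StringIO(SAMPLE_CSV)) if row]
S, G = candidate_elimination(rows)
print("S:", S)
print("G:", G)
```

The version space is every hypothesis lying between `S` and some member of `G`. This sketch does not check that `S` stays consistent with negative examples, so inconsistent or noisy data would need extra handling.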

Machine Learning Laboratory (15CSL76): Program 1: FIND-S Algorithm

Lab Program 1:

Implement and demonstrate the FIND-S algorithm for finding the most specific hypothesis based on a given set of training data samples. Read the training data from a .CSV file.

Algorithm:
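FIND-S can be sketched in a few lines: start from the most specific hypothesis, skip negative examples, and for each positive example replace any attribute that disagrees with `?`. The sketch assumes the last CSV column is a `yes`/`no` label; the sample rows (EnjoySport style) and the name `find_s` are illustrative, and an in-memory string stands in for the .CSV file.

```python
import csv
import io

def find_s(examples, positive_label="yes"):
    """Return the most specific hypothesis consistent with the positive examples."""
    hypothesis = None  # None stands for the most specific hypothesis (covers nothing)
    for *attributes, label in examples:
        if label.strip().lower() != positive_label:
            continue  # FIND-S ignores negative examples
        if hypothesis is None:
            hypothesis = list(attributes)  # first positive example is copied as-is
        else:
            # Generalise only the attributes that disagree with this example.
            hypothesis = [h if h == a else "?" for h, a in zip(hypothesis, attributes)]
    return hypothesis

# Hypothetical training data; a real program would open a .CSV file instead.
SAMPLE_CSV = """sunny,warm,normal,strong,warm,same,yes
sunny,warm,high,strong,warm,same,yes
rainy,cold,high,strong,warm,change,no
sunny,warm,high,strong,cool,change,yes
"""

rows = [row for row in csv.reader(io.StringIO(SAMPLE_CSV)) if row]
print(find_s(rows))
```

To read from disk, the `io.StringIO(...)` argument can be replaced with a file object opened with `open(path, newline="")`.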

MACHINE LEARNING LABORATORY (15CSL76)


NOTE:

- The programs can be implemented in either JAVA or Python.

- For Problems 1 to 6 and 10, programs are to be developed without using the built-in classes or APIs of Java/Python.

- Data sets can be taken from standard repositories (https://archive.ics.uci.edu/ml/datasets.html) or constructed by the students.

- Implement and demonstrate the FIND-S algorithm for finding the most specific hypothesis based on a given set of training data samples. Read the training data from a .CSV file.

- For a given set of training data examples stored in a .CSV file, implement and demonstrate the Candidate-Elimination algorithm to output a description of the set of all hypotheses consistent with the training examples.

- Write a program to demonstrate the working of the decision tree based ID3 algorithm. Use an appropriate data set for building the decision tree and apply this knowledge to classify a new sample.

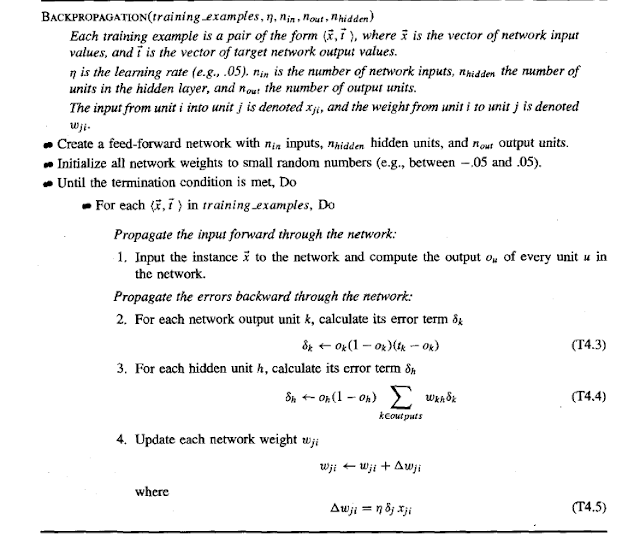

- Build an Artificial Neural Network by implementing the Backpropagation algorithm and test the same using appropriate data sets.

- Write a program to implement the naïve Bayesian classifier for a sample training data set stored as a .CSV file. Compute the accuracy of the classifier, considering few test data sets.

- Assuming a set of documents that need to be classified, use the naïve Bayesian Classifier model to perform this task. Built-in Java classes/API can be used to write the program. Calculate the accuracy, precision, and recall for your data set.

- Write a program to construct a Bayesian network considering medical data. Use this model to demonstrate the diagnosis of heart patients using standard Heart Disease Data Set. You can use Java/Python ML library classes/API.

- Apply EM algorithm to cluster a set of data stored in a .CSV file. Use the same data set for clustering using k-Means algorithm. Compare the results of these two algorithms and comment on the quality of clustering. You can add Java/Python ML library classes/API in the program.

- Write a program to implement k-Nearest Neighbour algorithm to classify the iris data set. Print both correct and wrong predictions. Java/Python ML library classes can be used for this problem.

- Implement the non-parametric Locally Weighted Regression algorithm in order to fit data points. Select appropriate data set for your experiment and draw graphs.
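For the last program in the list, Locally Weighted Regression, the sketch below shows one common formulation for a single input feature: each query point gets its own straight-line fit, with training points weighted by a Gaussian kernel of their distance to the query. The bandwidth name `tau`, the function name `lwr_predict`, and the sine-curve sample data are assumptions for illustration; plotting is left out to keep the example free of third-party libraries.

```python
import math

def lwr_predict(x0, xs, ys, tau=0.5):
    """Predict y at x0 from a weighted least-squares line centred on x0."""
    # Gaussian kernel: points near x0 get weight close to 1, far points close to 0.
    weights = [math.exp(-((x - x0) ** 2) / (2 * tau ** 2)) for x in xs]
    sw = sum(weights)
    swx = sum(w * x for w, x in zip(weights, xs))
    swy = sum(w * y for w, y in zip(weights, ys))
    swxx = sum(w * x * x for w, x in zip(weights, xs))
    swxy = sum(w * x * y for w, x, y in zip(weights, xs, ys))
    # Closed-form solution of weighted least squares for y = intercept + slope * x.
    slope = (sw * swxy - swx * swy) / (sw * swxx - swx ** 2)
    intercept = (swy - slope * swx) / sw
    return intercept + slope * x0

# Hypothetical data: samples of a sine curve on [0, 6].
xs = [i * 0.5 for i in range(13)]
ys = [math.sin(x) for x in xs]

for query in (1.0, 3.0, 5.0):
    print(query, round(lwr_predict(query, xs, ys), 3), round(math.sin(query), 3))
```

A smaller `tau` makes the fit follow the data more closely and a larger one approaches ordinary linear regression. Evaluating `lwr_predict` over a fine grid of query points gives the curve to draw against the raw data.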

MACHINE LEARNING Syllabus (15CS73)


Subject Code: 15CS73 Semester: 7 Scheme: CBCS

Text Books:

- Tom M. Mitchell, Machine Learning, India Edition 2013, McGraw Hill Education

- Trevor Hastie, Robert Tibshirani, Jerome Friedman, The Elements of Statistical Learning, 2nd edition, Springer Series in Statistics.

- Ethem Alpaydın, Introduction to Machine Learning, second edition, MIT Press.

Module – 1: Text book 1, Sections: 1.1 – 1.3, 2.1 – 2.5, 2.7

- Introduction:

- Well posed learning problems

- Designing a Learning system

- Perspective and Issues in Machine Learning.

- Concept Learning:

- Concept learning task

- Concept learning as search

- Find-S algorithm

- Version space

- Candidate Elimination algorithm

- Inductive Bias

- Decision Tree Learning:

- Decision tree representation

- Appropriate problems for decision tree learning

- Basic decision tree learning algorithm

- Hypothesis space search in decision tree learning

- Inductive bias in decision tree learning

- Issues in decision tree learning

Module – 3: Text book 1, Sections: 4.1 – 4.6

- Artificial Neural Networks:

- Introduction

- Neural Network representation

- Appropriate problems

- Perceptrons

- Backpropagation algorithm

Module – 4: Text book 1, Sections: 6.1 – 6.6, 6.9, 6.11, 6.12

- Bayesian Learning:

- Introduction

- Bayes theorem

- Bayes theorem and concept learning

- Maximum likelihood (ML) and least-squared (LS) error hypotheses

- ML hypotheses for predicting probabilities

- Minimum description length (MDL) principle

- Naive Bayes classifier

- Bayesian belief networks

- EM algorithm

Module – 5: Text book 1, Sections: 5.1-5.6, 8.1-8.5, 13.1-13.3

- Evaluating Hypotheses:

- Motivation

- Estimating hypothesis accuracy

- Basics of sampling theory

- General approach for deriving confidence intervals

- Difference in error of two hypotheses

- Comparing learning algorithms

- Instance Based Learning:

- Introduction

- k-Nearest neighbor learning

- Locally weighted regression

- Radial basis functions

- Case-based reasoning

- Reinforcement Learning:

- Introduction

- Learning Task

- Q Learning
